Updated June 22, 2020.

With trolls and toxicity running wild all over the internet, moderation has become practically mandatory for any publisher hoping to build and engage a profitable community.

Nearly 50% of Americans who have experienced incivility online completely remove themselves from the situation. That means if you allow toxicity to go unchecked on your properties, about half of the people who encounter something offensive are likely to abandon your platform.

Not to mention that Google has started banning media companies with toxic comments around their content from its Ads platform. ZeroHedge, for instance, was recently banned for allowing offensive and false information to exist on its website. The Federalist also received a warning that it would be banned for the same reason if protective actions weren’t taken.

In a joint statement with other big tech companies, Google explained that it will be focusing on “helping millions of people stay connected while also jointly combating fraud and misinformation about [COVID-19], elevating authoritative content… around the world.”

Google’s global move to tighten moderation restrictions on media content comes after several countries, like France and Australia, began challenging the tech giant’s dominance over media.

Moderating user interactions keeps your environment protected and helps your visitors feel safe enough to engage in conversations and build relationships on your platform.

But while many engagement tool vendors claim to use fancy moderation algorithms, most of them are nothing more than ban-word lists. To protect the environment on your platform so users actually want to return, you’ll need to go beyond a simple ban-word list.

Instead, sift through the different forms of moderation so you can select a tool that will best support your community guidelines.

From more traditional user self-policing and manual moderation, to fully automated and full-service approaches, we’ve broken down the different types of moderation to help you understand what will work best for your business.

User-to-User Moderation

You know how on social media, you have the option to flag or report anything that distresses you so moderators can review it? Well, that’s user-to-user moderation: users monitoring and reporting other users for bad behavior.

As an extra layer of protection, consider giving your community the power to flag offensive or concerning posts that may slip through the cracks. Facebook’s moderation has some flaws and gaps, which is why you need a complete, specialized platform like Viafoura.
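To make the flagging idea concrete, here is a minimal sketch of how user flags could feed a moderator review queue. It is an illustration only, not how Viafoura or any other vendor implements flagging: the `FlagTracker` class, its method names and the threshold of three flags are all assumptions made for this example.

```python
from collections import defaultdict

# Hypothetical setting: how many distinct users must flag a post
# before it is sent to moderators. Tune this to your community.
FLAG_THRESHOLD = 3


class FlagTracker:
    """Collects user flags and surfaces posts that need a moderator's eyes."""

    def __init__(self, threshold=FLAG_THRESHOLD):
        self.threshold = threshold
        # post_id -> set of user_ids who flagged it
        self._flags = defaultdict(set)

    def flag(self, post_id, user_id):
        """Record a flag. Returns True once the post has reached the threshold."""
        # A set ignores repeat flags from the same user, so one angry
        # reader cannot push a post into review alone.
        self._flags[post_id].add(user_id)
        return len(self._flags[post_id]) >= self.threshold

    def review_queue(self):
        """Post ids at or over the threshold, most-flagged first."""
        over = [
            (post_id, len(users))
            for post_id, users in self._flags.items()
            if len(users) >= self.threshold
        ]
        return [post_id for post_id, _ in sorted(over, key=lambda item: -item[1])]
```

Counting distinct users rather than raw flags is a design choice in this sketch: it keeps a single user from gaming the queue, while still letting the community escalate posts that slip past other layers of moderation.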

To minimize the amount of user-to-user moderation needed on your property, it’s important to have strong community guidelines. Read the best practices for creating community guidelines here.

Human Moderation

Moderation is an extremely complex topic that can be broken down into different forms. But here’s the problem: many publishers think that human moderation — where people manually go through users’ posts and block any offensive ones — is the only kind of moderation.

Human moderation is incredibly time-consuming and expensive on its own. Publishers who can’t afford to hire external human moderators and don’t have the time for it in-house tend to give up on building an engaged community altogether.

Sound familiar?

Consider this: when human moderation is paired with an automatic solution, the need for it is minimized, reducing the associated time and cost investments.

The great part about human moderation is that humans are able to catch harassment or incivility that algorithms can’t always pick up while they’re still learning. So instead of having humans do all of the work, reduce their moderation scope to focus on what technology can’t catch or understand on its own.

Read more about human vs. machine moderation here: Human vs. Machine: The Moderation Wars

Automatic Moderation

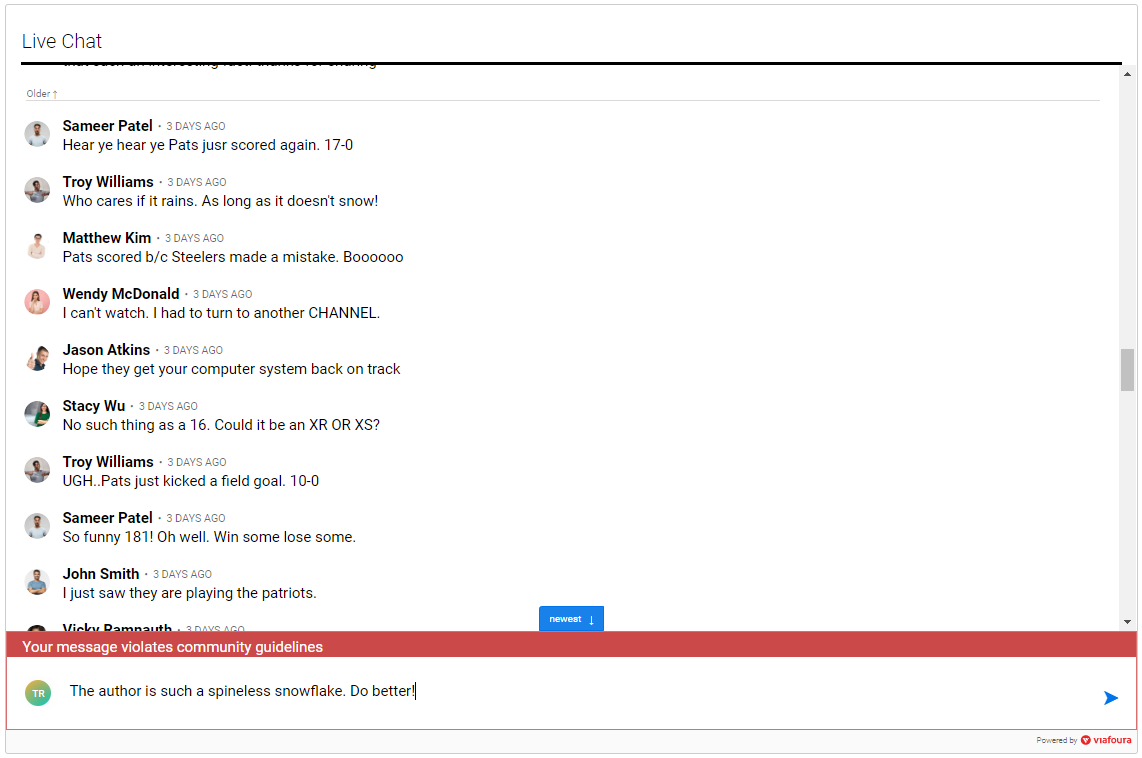

Forget simple ban-word lists. A truly effective automated moderation solution should block the majority of toxic posts the very second a user submits a comment.

Intelligent automatic moderation lightens the workload for human moderators by minimizing the number of comments pushed to humans, allowing them to focus on truly questionable posts, or those that are flagged by other users.
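Here is a rough sketch of that division of labor. It assumes your moderation tool returns a toxicity score between 0 and 1 for each comment; the `route_comment` function and both thresholds are hypothetical and would need tuning against your own community guidelines.

```python
# Assumed thresholds for illustration only.
PUBLISH_BELOW = 0.2   # scores under this are published automatically
BLOCK_AT = 0.9        # scores at or above this are blocked automatically


def route_comment(toxicity_score, flagged_by_users=False):
    """Decide what happens to a comment: 'publish', 'human_review' or 'block'."""
    if not 0.0 <= toxicity_score <= 1.0:
        raise ValueError("toxicity_score must be between 0 and 1")

    # Clearly toxic: stop it before it is ever posted.
    if toxicity_score >= BLOCK_AT:
        return "block"

    # Questionable, or reported by other users: send to a human moderator.
    if flagged_by_users or toxicity_score >= PUBLISH_BELOW:
        return "human_review"

    # Clearly fine: publish without human involvement.
    return "publish"
```

With thresholds like these, human moderators only see the comments in the uncertain middle band plus anything the community has flagged, which is where their judgment adds the most value.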

Quick tip: Many service providers claim to have AI or automatic moderation, but don’t actually leverage natural language processing or machine learning to understand variations of words and sentence structures. Check with your moderation provider that your tool learns as moderators approve or block comments, so that each decision further trains an algorithm customized to your guidelines.
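To see why variations of words matter, consider this small sketch. It compares an exact-match ban-word list against the same list with some basic text normalization. The word list and the character substitutions are placeholders invented for this example, and the normalized version is still only pattern matching, not natural language processing or machine learning. It simply shows how easily an exact-match list is bypassed.

```python
import re

# Tiny placeholder list for illustration.
BANNED = {"idiot", "stupid"}

# Assumed substitutions that users commonly rely on to dodge filters.
LOOKALIKES = str.maketrans(
    {"1": "i", "0": "o", "3": "e", "4": "a", "5": "s", "@": "a", "$": "s"}
)


def naive_filter(text):
    """Exact-match ban-word list: True if any banned word appears as-is."""
    words = re.findall(r"[a-z]+", text.lower())
    return any(word in BANNED for word in words)


def normalize(text):
    """Undo a few common obfuscation tricks before matching."""
    text = text.lower().translate(LOOKALIKES)
    text = re.sub(r"[^a-z\s]", "", text)       # drop punctuation used to split words
    text = re.sub(r"(.)\1{2,}", r"\1", text)   # collapse runs of 3+ repeated characters
    return text


def normalized_filter(text):
    """Same ban-word list, applied after normalization."""
    return any(word in BANNED for word in normalize(text).split())


for comment in ["You are an 1d10t", "so stuuuupid", "s.t.u.p.i.d", "Nice article"]:
    print(comment, naive_filter(comment), normalized_filter(comment))
```

The exact-match filter misses all three disguised comments, while the normalized one catches them. Neither understands sentence structure or context, which is the gap that a learning-based tool is meant to close.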

Full-Service Moderation

What’s a publisher to do when they just don’t have enough time on their hands to worry about moderation?

Answer: Outsource the complete solution to a service provider.

Choose a vendor that can bundle a cost-effective package for you, which should include human, sophisticated automatic and user-to-user moderation services to get the best bang for your buck.

Remember, folks: a protected community is a happy community. And that translates to revenue growth.

Hungry for more knowledge on moderation? Check out seven tips that will help your moderation team survive a national election.