Audience engagement managers know that a healthy comment section is a core component of building a healthy online community. With contentious political debates happening more frequently and more people turning to the internet to vent their frustration, maintaining a civil yet lively comment section has become more important than ever.

Thankfully, one simple technique can help publishers and moderators guide conversations with greater peace of mind. According to a new study from Viafoura, media outlets see a significant increase in traffic, civility and engagement when publishers post the first comment.

Posting the first comment has long been a technique used by social media managers and influencers to control the tone of the conversation and create engagement. The first comment acts as an ‘ice-breaker’ and sets the standard for the conversation. It also invites responses from readers, which can increase time spent on site and strengthen brand loyalty.

What happens when publishers post first?

The study took place between September 28 and December 15, 2021, in 15 newsrooms across Canada, representing a diverse range of geographical regions and positions across the political spectrum. Each newsroom posted the first comment on a number of articles across its publications within the first hour of the articles going live. This test group was then compared with a baseline group to put the results in context.

One element that varied from newsroom to newsroom was the type of comment posted beneath the article. Some editors offered readers assistance by responding to particular points in the article or answering questions. Others asked specific, directed questions about readers’ reactions to the article. Writers had the freedom to bring their own voice and creativity to the comment section!

Over the course of the study, Viafoura collected data on comment volume, time spent commenting, and conversion before and after engagement: three crucial metrics for evaluating the success of an online community space.

Posting first means increased engagement, conversion & peace of mind

The study found that activity increased dramatically across all metrics, with a 45% increase in time spent in comments, a 380% increase in average total comments and a 347% increase in average likes. This sharp rise suggests that controlling the first comment sets the tone for the conversation and leads to more civil discourse.

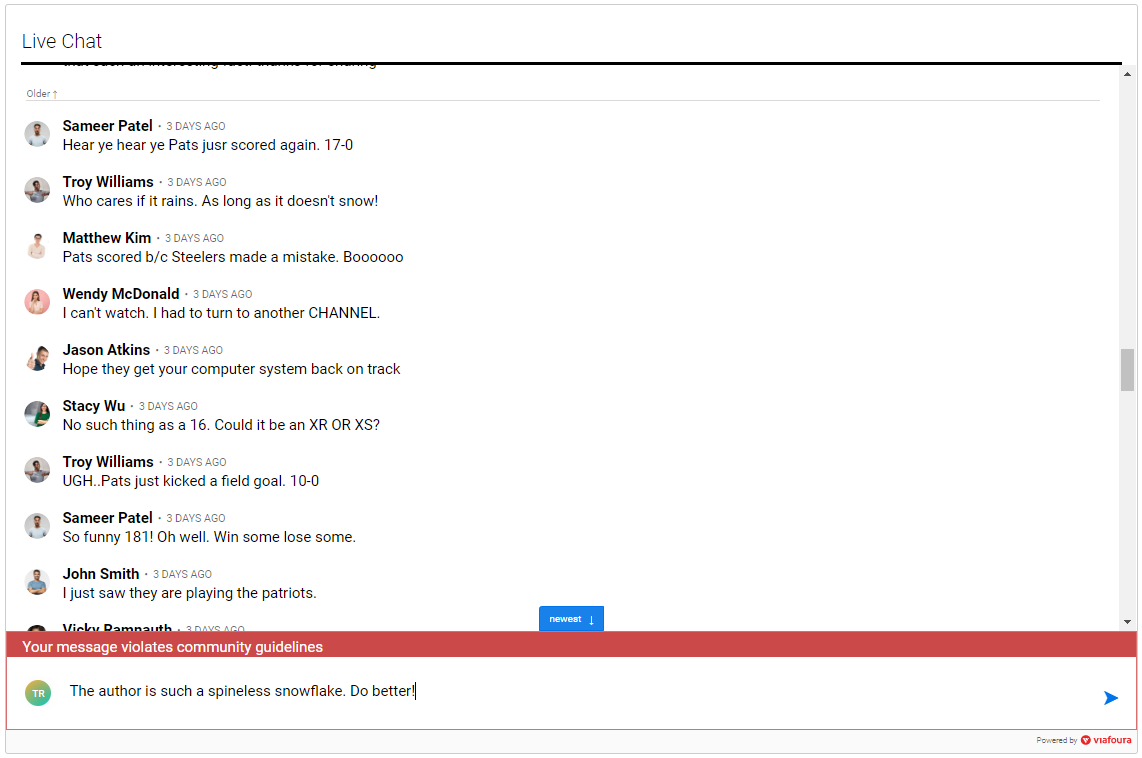

With a standard of behavior in place, the need for moderation decreased. While moderators flagged 6.8% of posts and disabled 9.1% of users in the baseline group, the test group saw lower rates, with 4.8% of posts flagged and 7.4% of users disabled. Simply by modeling good behavior, publishers reduced the number of community guideline violations and user bans.

Perhaps the most surprising result was the significant increase in registrations.

Previous research has shown that almost 50% of members end up leaving a platform when exposed to trolling. In contrast, when publishers posted the first comment, each article saw a 55% increase in registrations, along with a 9% increase in users who tried to engage with comments before signing up.

The positive impact didn’t stop at conversion. Viafoura also saw a 21% increase in users who engaged with comments after signing up. This suggests that users joined the discussion, felt positive enough to register, and then stayed engaged and loyal past the point of conversion.

These data support the idea that posting the first comment can be a significant step toward reaching conversion goals. When publishers interact directly with their community, they help maintain a sense of safety and attentiveness that can directly increase engagement.

What can we conclude?

Strong customer loyalty is essential to the health and longevity of any online brand. Simple moderation techniques can make the difference between a contentious online conversation and a thriving online community.

By setting the tone for the conversation, publishers can steer its content, invite polite discourse and even tailor their engagement to the needs and interests of their target audience. Across the newsrooms in this study, first comments proved to be a valuable step in protecting and growing digital communities.